Note: Post updated on 3/29/12 to correct titles on the three T-shirt size tables and to lighten the blue shade on the Medium T-shirt size row in these tables to make it easier to read.

“We do estimation very badly in the software field. Most of our estimates are more like wishes than realistic targets.”

– Robert L. Glass, Nov 2002 – Facts and Fallacies of Software Engineering

“The best way to get a project done faster is to start sooner.”

– Jim Highsmith

In earlier posts (here, here, and here), I commented briefly about moving away from upfront estimating and toward tracking and measuring “actual” metrics to complete work items, and using this data as a foundation for predictable scheduling and forecasting. Am I suggesting your software development team can’t achieve effective “predictable” scheduling using upfront estimating? No. But first, to be clear, I’m distinguishing upfront estimating activities from upfront analysis activities. The former is associated with predicting the number of calendar days (duration) to complete a work item and the actual hours (effort) expended on a work item. The latter is associated more with partitioning larger work items (epic, feature) into smaller ones (story, task) and determining relationships and dependencies between them to assess complexity or risk.1 The significance of decoupling these becomes apparent shortly. So, in your context, if teams haven’t achieved effective predictable scheduling using upfront estimating, I am suggesting it might be beneficial to approach project planning from what is likely a different perspective. I’m also suggesting that over time this different perspective may reduce planning effort.

As an example, I’ll focus on one of the “actual” measurements my past teams collected, in this case Lead times for “story” work items, and discuss how we used this data to derive a very predictable range of “T-shirt” sizes. This range helped us predictably manage story level work items thru our workflow, and enabled us to identify issues earlier as they emerged for those needing alternative (special) processing or management. Before moving to how and what we did and the benefits obtained, I’ll briefly provide context for why we shifted perspective, which may reveal some insights for benefit in your environment.

Earlier Times in a Nutshell

Our development teams were well into implementing agile processes, and demonstrated visible improvements in several areas related to technical practices and our ability to deliver more frequently. However, we continually missed “commitments” for the number of stories to be completed in a sprint whether it was two weeks or 30 days. We were unsuccessful at effectively predicting duration or effort for completing stories using upfront estimating techniques that we had tried including variations of planning poker with ideal days, points, hours, Fibonacci sequences, etc. I won’t go into consequences of these estimating efforts here, but will highlight a key observation made during efforts to mitigate these costs that led to shifting our planning perspective.

As mid-sprint approached and we saw our commitment at risk, at one point a team decided to assertively reduce (“de-commit”) work items (stories in this case) in their “Doing” state, moving them back to ToDo or to the story backlog. Again and again, when we did this in a sprint, stories remaining in the Doing state would get completed quickly, and then we’d “whip back” the other way and re-pull stories before the sprint end. After a few of these “herky-jerky” sprints, we did something different and shifted to a “pull-scheduling” perspective.

Push vs Pull Scheduling?

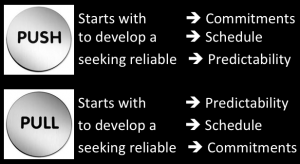

This image is my summary derived from sources contrasting push and pull scheduling systems.2 Push scheduling starts with obtaining commitments to upfront estimates of duration and effort, then develops a schedule based on these estimates and seeks predictable outcomes by attempting to adhere to the developed schedule. Pull scheduling starts with obtaining predictability of upfront (measured) capabilities and known constraints, then develops a schedule based on these capabilities and constraints and seeks to achieve commitments to the developed schedule. Is this distinction a bit too intangible for you?

Seeing Pull Scheduling in Action

The changes included some made upstream related to conditioning the feature level work item backlog in the software development workflow along with establishing some work-in-progress limits.3 Downstream of the feature backlog, “policy” changes focused upfront planning on the analysis activities of partitioning a “feature” into story level work items. While the Product Owner still created some key stories upfront, now most were discovered just-in-time (JIT) with the full software development team after the feature was pulled to work on.

The initial analysis activity was time-boxed to either identify the first “batch” of 15 to 20 core stories or end after two hours. As a story was created, we did make a good faith attempt to “size” it roughly as a two to four day effort. But this “sizing effort” was mostly a simple “head-bob” exercise. We simply asked, “Does this look like two to four days?” If too few heads bobbed “yes”, a “spike” story was attached to this questionable large story, both were placed in ToDo, and any more sizing analysis was deferred until the story was pulled to be worked on. When this combination was pulled from ToDo, a deeper analysis spike activity of a day or two was performed to break down the questionable large story into a number of “more likely” two to four day stories. We clearly expected “new” stories to be discovered after work started on any of the feature’s initial “core” 15 to 20 stories. Again, the upfront activities now were focused on analysis to more fully scope, partition, and understand the feature. They were not to estimate upfront duration or effort, though I’ll touch on these two again just a bit later.

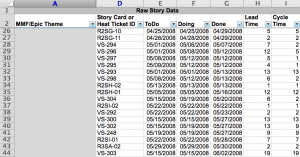

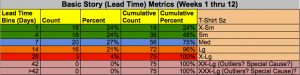

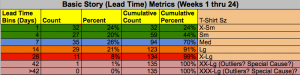

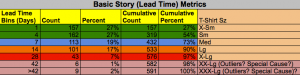

As the team worked on stories, basic metrics were collected (see spreadsheet “snippet” of actual project data; parent MMF/epic identifiers were grayed out intentionally). From these basic “actual” measurements we were able to (quickly) begin developing a number of useful models including a “T-shirt” model for expected durations to complete a story level work item. Over time, as more data was collected, this model became very stable. These images show the model at 12 weeks, 24 weeks, and then after nearly 16 months of data (see the tables labeled Basic Story Lead Time Metrics).

From these models we learned early that nearly 50% of our stories could be expected to be completed in 1 to 4 days, nearly 20%+/- in 5 to 7 days, nearly 20%+/- in 8 to 14 days, and nearly 10%+/- over 14 days. While over time we improved a bit on these performance metrics on the lower end of the duration lengths, the significant point here is early on we learned key capabilities and constraints of our software development system in our context. We were now able to effectively predict durations for completing stories and with much less effort.
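To make the bucketing concrete, here is a minimal sketch of how a list of measured story Lead times could be turned into a T-shirt size distribution. It is not the spreadsheet we used; the function names and the sample data are hypothetical, and the bucket boundaries (1 to 4, 5 to 7, 8 to 14, and over 14 calendar days) are simply the ranges quoted above.

```python
from collections import Counter

# Upper bounds (inclusive, in calendar days) for each size, taken from the
# ranges quoted in the post. Anything over 14 days is Extra-Large.
BUCKETS = [(4, "Small"), (7, "Medium"), (14, "Large")]
SIZES = ["Small", "Medium", "Large", "Extra-Large"]


def tshirt_size(lead_time_days):
    """Map one measured Lead time (calendar days) to a T-shirt size."""
    for upper_bound, label in BUCKETS:
        if lead_time_days <= upper_bound:
            return label
    return "Extra-Large"


def size_distribution(lead_times):
    """Return the percentage of stories that fell into each size."""
    if not lead_times:
        raise ValueError("need at least one measured Lead time")
    counts = Counter(tshirt_size(days) for days in lead_times)
    total = len(lead_times)
    return {size: round(100 * counts[size] / total, 1) for size in SIZES}


# Hypothetical Lead times for ten completed stories (not real project data).
sample_lead_times = [1, 2, 2, 3, 4, 5, 6, 9, 12, 20]
distribution = size_distribution(sample_lead_times)
print(distribution)
# {'Small': 50.0, 'Medium': 20.0, 'Large': 20.0, 'Extra-Large': 10.0}
```

Re-running something like this as more completed stories accumulate is one way to see whether the percentages are settling down, which is what "stable" means for a model like this.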

Note: I’m not discussing Cycle time here, but a similar distribution data table was also created showing how long it was expected to take for a work item to reach Done after it had arrived in the Doing process state of the software development workflow (development effort). However, see my earlier post here for a fuller discussion of Lead time versus Cycle time and how it might impact a discussion on duration or effort tracking. Also, the question whether to include weekends, holidays, vacations, etc. in Lead or Cycle time calculations is a different discussion in my opinion and one I won’t get into here either.

Walking Thru the T-shirt Sizing Table

How did these tables help? First, we now began to expect and communicate to others that the “typical” story (50%+/-) was a small size work item and should be done within four calendar days after entering the ToDo process state in the workflow. This became the team’s internal service level agreement (SLA) and it included weekends, holidays, vacations, sick days, staff meetings, HR trainings, etc. for members of the team. The basic metrics we collected (see above spreadsheet) included all these “variables” (numerous small variations) as part of “the system” measured and reflected them in these models. Second, as a story’s duration approached 4 days, it garnered additional attention (analysis) from others on the team and in our daily stand-ups. What is slowing it down? Who might be able to help? Can it be partitioned with some portion being completed and still providing value, while the other pieces get de-scoped, deferred, or completed later (see concept of fidelity)?4 This 20%+/- of the “typical” stories we encountered were considered medium size work items completed typically in 5 to 7 calendar days after entering the ToDo workflow process state.

When a story reached eight days (orange row on the table), it was considered a “large” work item and our model showed we’d expect to have 20%+/- of the stories reach this size level. This is when we ramped the analysis up yet another notch and applied other tactics beyond the standard process and practices. For example, we might have multiple pairs swarm on the story, reconsider the initial strategy or solution for the story, consider applying a set-based design or engineering approach, re-evaluate its value and priority in the light of more information about the story, defer it, or even decide to simply continue working on it “normally” understanding it more fully and accepting the “cost”, if any, of the delay. Finally, when a story reached two-plus weeks (red row on the table), it was considered an “extra-large” work item and our model showed we’d expect 10%+/- of the stories to reach this size level. These stories typically were those that had dependencies outside of the cross-functional software development team. For example, stories with dependencies on marketing, legal, external engineering or IT departments, 3rd-party tools or services, or needing approvals for purchases of tools or 3rd-party services. In many cases these stories required more than additional analysis and often were issues related to systemic or organizational challenges requiring higher level management involvement to address.5
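The walk-through above amounts to a simple aging policy: the longer a story has been in the workflow, the more attention it gets. A minimal sketch of that idea follows. The function names, the level labels, and the exact cut-offs (attention from day 4, “large” from day 8, “extra-large” past day 14) are my reading of the text for illustration, not a tool the team ran.

```python
from datetime import date


def story_age_days(entered_todo, today):
    """Calendar days since the story entered ToDo (weekends, holidays included)."""
    return (today - entered_todo).days


def attention_level(age_days):
    """Map a story's age to the level of attention described in the post."""
    if age_days > 14:
        return "extra-large"  # likely external dependency, may need management help
    if age_days >= 8:
        return "large"        # swarm, rethink the solution, re-prioritize, or defer
    if age_days >= 4:
        return "watch"        # raise at stand-up: what is slowing it, who can help?
    return "normal"           # within the four-day expectation for a typical story


# Hypothetical story that entered ToDo on March 1 and is reviewed on March 9.
age = story_age_days(date(2012, 3, 1), date(2012, 3, 9))
print(age, attention_level(age))
# 8 large
```

The point of a check like this is not the labels themselves but the trigger: a story crossing a threshold prompts a conversation earlier than waiting for the end of a sprint would.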

Bringing It to a Close

As mentioned earlier, the same basic data collected above was analyzed in various ways to develop additional models (ex. other data distributions, scatter plots, run (trend) charts, etc.) that helped us visualize and understand the capabilities and constraints of the software development workflow (system) in our context. For this post I only focused on the story level work item and used actual story Lead time data in the context of T-shirt sizing as one example of applying a pull scheduling perspective. I showed how we were able to get effective predictability and reduce overall upfront planning activities using an alternative to upfront estimating that was not providing a commensurate level of value (predictability) returned for the effort expended. However, several other similar and useful distribution models (T-shirt sizing style) contributed to our ability to create more effective schedules as well. For example, models for the expected number of stories completed per specified time interval of interest (flow) such as per day, per week, two-weeks, whatever, or models for the expected number of stories completed per feature.
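As one illustration of the flow models mentioned above, the sketch below counts stories completed per ISO calendar week from a list of Done dates. The function name and the sample dates are hypothetical, and a weekly interval is just one choice; the same grouping could be done per day or per two weeks.

```python
from collections import Counter
from datetime import date


def weekly_throughput(done_dates):
    """Count completed stories per (ISO year, ISO week), in chronological order."""
    counts = Counter(d.isocalendar()[:2] for d in done_dates)
    return dict(sorted(counts.items()))


# Hypothetical Done dates for five stories across two consecutive weeks.
done_dates = [
    date(2012, 3, 5),
    date(2012, 3, 7),
    date(2012, 3, 9),
    date(2012, 3, 12),
    date(2012, 3, 16),
]
throughput = weekly_throughput(done_dates)
for (year, week), stories in throughput.items():
    print(f"{year}-W{week:02d}: {stories} stories done")
```

Collected over enough weeks, counts like these can be bucketed into a distribution in the same way as the story Lead times, giving an expected range of stories completed per week rather than a single estimate.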

We in fact used these additional models to establish similar effective predictable expectations for these metrics as well. In the process, we were able to eliminate the need for a “sprint planning meeting” every two weeks. Instead, we only needed JIT planning sessions approximately every 29 to 32 calendar days, which was the measured typical duration for completing one work item from our feature level backlog. Just as we were now able to identify emerging large and extra-large stories and respond earlier to the associated issues, in a similar fashion a distribution model of story counts per feature allowed us to more effectively determine expected number of stories per feature level work item and recognize an emerging “large” feature and respond earlier to associated issues. In essence, this is pull scheduling. We had established predictability upfront based on identifying capabilities and constraints derived from “actual” metrics (measurements) of our software development workflow (system). We developed schedules (expectations) based on these capabilities and constraints. Then we worked to gain commitments to these expectations and to meet them by also using the models to help identify and enable us to respond more quickly as issues emerged rather than continue to try with little success to get better at upfront estimating (predicting the future).

Take care,

Frank

References:

1 David J. Anderson, “Estimation versus Analysis” (blog post, December 18, 2005; Note: as of July 2013, this blog post was no longer available on the original site).

2 Mary and Tom Poppendieck, Lean Software Development, (2003); Implementing Lean Software Development, (2006); Leading Lean Software Development, (2009); see push scheduling, pull scheduling, push systems, or pull systems in the index of these three books (see their web site here).

3 David J. Anderson, Kanban, (2010); foundational book on implementing concepts of limiting work-in-progress in software development context.

4 Dean Leffingwell, Scaling Software Agility, (2007); introduces and discusses the concepts of “fidelity” levels (least imaginable, minimum credible, moderate, and best) when delivering software development solutions, and “committing to the signature of a feature’s scope”, not the implementation of the feature. Both are key tools in being able to deliver software predictably in challenging contexts.

5 Dave Snowden, see Cynefin Framework (YouTube video); in our context, the Small, Medium, Large, and Extra-Large T-shirt “sizes” for story level work items were less likely to imply amounts of “tasks” to do (more dirt to shovel) and more likely to indicate increasing difficulty in partitioning a story or attaining the functional solution desired (simple, complicated, or complex); as “duration” increased, our efforts went from standard practices to much more iterative, learning focused, and emergent ones.

Dear Frank,

Thoroughly enjoyed the analytical breakdown of Pull Scheduling, however I would like to highlight one of the side effects, Team Maturation. For years, I’ve heard developers discuss the ‘ineffectiveness of management’ to prioritize work items, schedule/promise delivery dates and guide team work efforts. The Pull system decreases the impact of management (no project manager in Scrum, right?) on the team and pressures the team to coordinate its own efforts. Without management ‘in the way’ the team is pushed into the arena of organizing its work in the most effective way of delivering. Plus, it removes the timeless excuse for failure of “Well if we had a good manager…”. For example, work items that are data-centric in nature are not pulled into production when the dba is not available or pulling work into the system that is dependent on external issues (hardware, workflow decisions, UX design, etc.) that have not been finalized. In addition, the organization of team members is performed by the team itself. This is the area where management usually commits teams without being aware of former commitments, scheduled/unscheduled time off, closing previous project work, individual training, etc.

Team maturity can rely on several factors such as cohesion (how long working together), tech skills of team members, proper domain experience (subject matter experts) and the willingness to challenge each other in the face of behaviors not agreed upon as acceptable by the team. When work needs to be managed by the team itself, the rate of work pulled into the system is almost perfectly balanced with the capacity to reasonably deliver work in a timely fashion. This system truly ratchets up team commitment, which further strengthens cohesion with more practice and success. It’s one thing for someone to commit you to a schedule, but the level of commitment increases substantially when a team pulls a work item and personally (and collectively) commits to completing it…